You are viewing documentation for KubeSphere version: v3.0.0

KubeSphere v3.0.0 documentation is no longer actively maintained. The version you are currently viewing is a static snapshot. For up-to-date documentation, see the latest version.

Import an AWS EKS Cluster

This tutorial demonstrates how to import an AWS EKS cluster through the direct connection method. If you want to use the agent connection method, refer to Agent Connection.

Prerequisites

- You have a Kubernetes cluster with KubeSphere installed and have prepared it as the Host Cluster. For more information about how to prepare a Host Cluster, refer to Prepare a Host Cluster.

- You have an EKS cluster to be used as the Member Cluster.

Import an EKS Cluster

Step 1: Deploy KubeSphere on your EKS Cluster

You need to deploy KubeSphere on your EKS cluster first. For more information about how to deploy KubeSphere on EKS, refer to Deploy KubeSphere on AWS EKS.

Step 2: Prepare the EKS Member Cluster

- To manage the Member Cluster from the Host Cluster, you need to make `jwtSecret` the same on both clusters. Therefore, first retrieve it by running the following command on your Host Cluster:

  ```bash
  kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret
  ```

  The output is similar to the following:

  ```yaml
  jwtSecret: "QVguGh7qnURywHn2od9IiOX6X8f8wK8g"
  ```

- Log in to the KubeSphere console of the EKS cluster as `admin`. Click Platform in the upper left corner and then select Clusters Management.

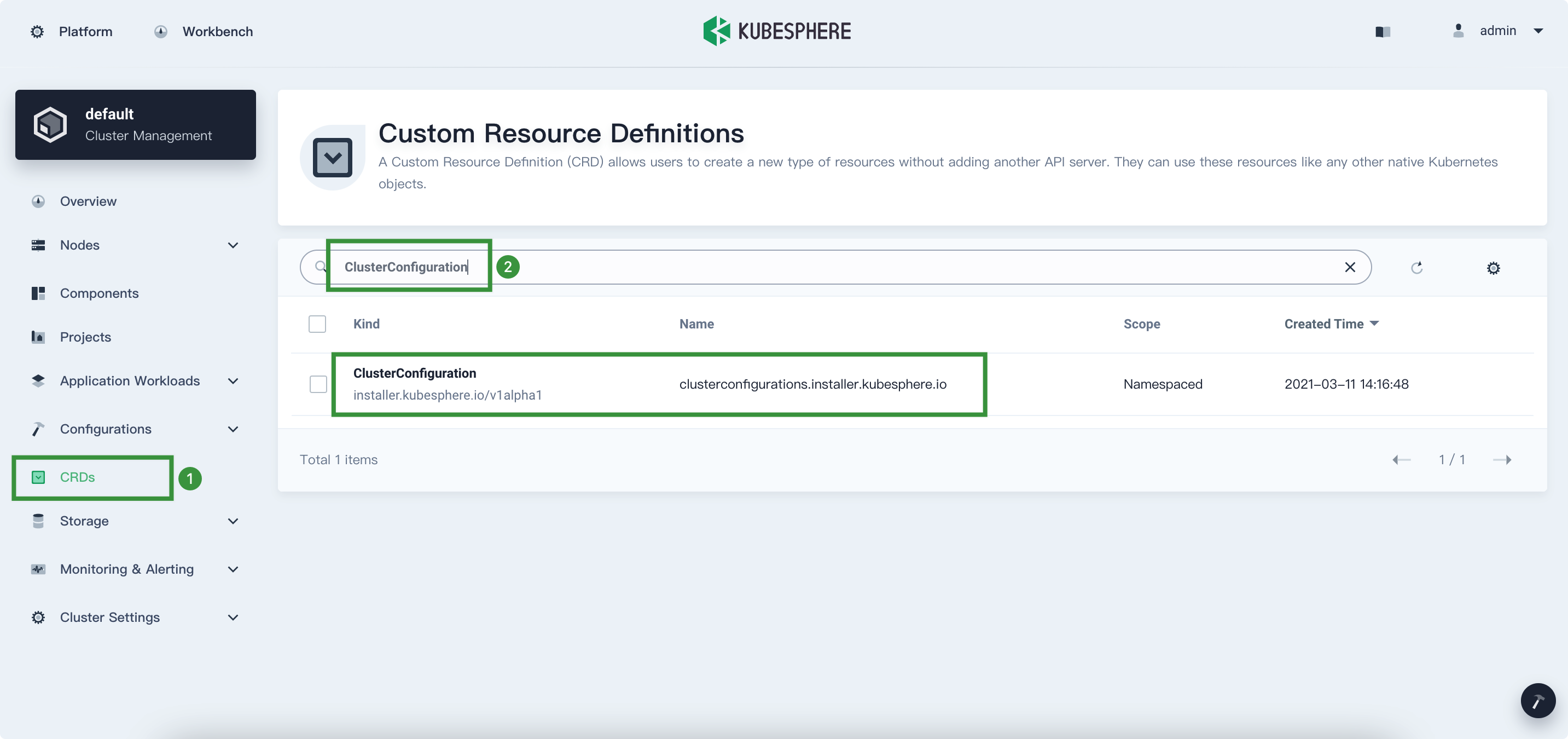

- Go to CRDs, enter `ClusterConfiguration` in the search bar, and then press Enter on your keyboard. Click ClusterConfiguration to go to its detail page.

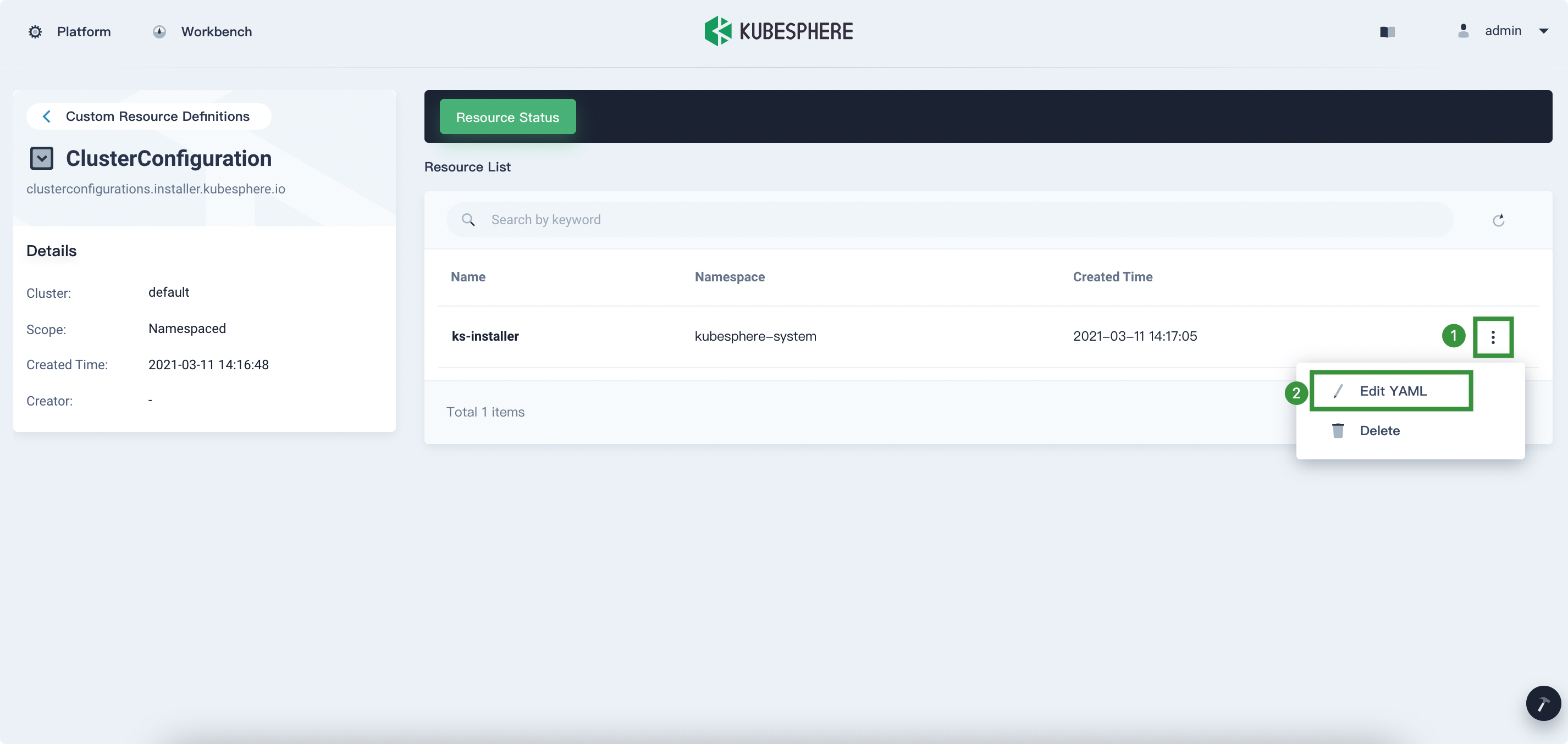

- Click the three dots on the right and then select Edit YAML to edit `ks-installer`.

- In the YAML file of `ks-installer`, change the value of `jwtSecret` to the value retrieved from your Host Cluster and set the value of `clusterRole` to `member`. Click Update to save your changes.

  ```yaml
  authentication:
    jwtSecret: QVguGh7qnURywHn2od9IiOX6X8f8wK8g
  multicluster:
    clusterRole: member
  ```

  Note

  Make sure you use the value of your own `jwtSecret`. It may take a while for the changes to take effect.
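If you prefer the command line to the console, you can approximate the edits in this step with `kubectl`. The script below is a minimal sketch and is not part of the official procedure. It assumes the following:

- Your kubeconfig has a context for each cluster. The default names `host` and `eks-member` are hypothetical.
- The `jwtSecret` line in `kubesphere-config` looks like the output shown above.
- Applying a JSON merge patch to the `ks-installer` ClusterConfiguration has the same effect as editing its YAML in the console.

The function names are also illustrative.

```bash
#!/usr/bin/env bash
# Sketch: copy the Host Cluster's jwtSecret to the EKS Member Cluster and set clusterRole to member.
# Assumption: the kubectl context names "host" and "eks-member" are hypothetical defaults.

# Print the value of the first line on stdin that contains jwtSecret, with quotes stripped.
extract_jwt_secret() {
  grep jwtSecret | head -n 1 | awk '{print $2}' | tr -d '"'
}

# Print a JSON merge patch that sets spec.authentication.jwtSecret and spec.multicluster.clusterRole.
build_member_patch() {
  printf '{"spec":{"authentication":{"jwtSecret":"%s"},"multicluster":{"clusterRole":"member"}}}' "$1"
}

# Read jwtSecret from the Host Cluster, then patch ks-installer on the Member Cluster.
sync_jwt_secret() {
  host_ctx="${1:-host}"
  member_ctx="${2:-eks-member}"
  # Same filter as the command above: drop the annotation line that also mentions jwtSecret.
  secret=$(kubectl --context "$host_ctx" -n kubesphere-system get cm kubesphere-config -o yaml \
    | grep -v "apiVersion" | extract_jwt_secret)
  if [ -z "$secret" ]; then
    echo "jwtSecret not found in context $host_ctx" >&2
    return 1
  fi
  kubectl --context "$member_ctx" -n kubesphere-system patch clusterconfiguration ks-installer \
    --type merge -p "$(build_member_patch "$secret")"
}
```

After you load the functions, running `sync_jwt_secret host eks-member` reads the secret from the Host Cluster and patches the Member Cluster. The patch is built with `printf`, so the secret must not contain quotes or backslashes. Values like the one shown above meet this requirement.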

Step 3: Create a new kubeconfig file

- Amazon EKS doesn’t provide a built-in kubeconfig file as a standard kubeadm cluster does. Nevertheless, you can create a kubeconfig file by referring to this document. The generated kubeconfig file looks like the following:

  ```yaml
  apiVersion: v1
  clusters:
  - cluster:
      server: <endpoint-url>
      certificate-authority-data: <base64-encoded-ca-cert>
    name: kubernetes
  contexts:
  - context:
      cluster: kubernetes
      user: aws
    name: aws
  current-context: aws
  kind: Config
  preferences: {}
  users:
  - name: aws
    user:
      exec:
        apiVersion: client.authentication.k8s.io/v1alpha1
        command: aws
        args:
          - "eks"
          - "get-token"
          - "--cluster-name"
          - "<cluster-name>"
          # - "--role"
          # - "<role-arn>"
        # env:
          # - name: AWS_PROFILE
          #   value: "<aws-profile>"
  ```

  However, this automatically generated kubeconfig file requires the `aws` command (AWS CLI) to be installed on every computer that uses it.

- Run the following commands on your local computer to get the token of the ServiceAccount `kubesphere` created by KubeSphere. This ServiceAccount has cluster admin access to the cluster, and its token will be used in the new kubeconfig.

  ```bash
  TOKEN=$(kubectl -n kubesphere-system get secret $(kubectl -n kubesphere-system get sa kubesphere -o jsonpath='{.secrets[0].name}') -o jsonpath='{.data.token}' | base64 -d)
  kubectl config set-credentials kubesphere --token=${TOKEN}
  kubectl config set-context --current --user=kubesphere
  ```

- Retrieve the new kubeconfig file by running the following command:

  ```bash
  cat ~/.kube/config
  ```

  The output is similar to the following. A new user `kubesphere` has been added and set as the user of the current context:

  ```yaml
  apiVersion: v1
  clusters:
  - cluster:
      certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZ...S0tLQo=
      server: https://*.sk1.cn-north-1.eks.amazonaws.com.cn
    name: arn:aws-cn:eks:cn-north-1:660450875567:cluster/EKS-LUSLVMT6
  contexts:
  - context:
      cluster: arn:aws-cn:eks:cn-north-1:660450875567:cluster/EKS-LUSLVMT6
      user: kubesphere
    name: arn:aws-cn:eks:cn-north-1:660450875567:cluster/EKS-LUSLVMT6
  current-context: arn:aws-cn:eks:cn-north-1:660450875567:cluster/EKS-LUSLVMT6
  kind: Config
  preferences: {}
  users:
  - name: arn:aws-cn:eks:cn-north-1:660450875567:cluster/EKS-LUSLVMT6
    user:
      exec:
        apiVersion: client.authentication.k8s.io/v1alpha1
        args:
        - --region
        - cn-north-1
        - eks
        - get-token
        - --cluster-name
        - EKS-LUSLVMT6
        command: aws
        env: null
  - name: kubesphere
    user:
      token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImlCRHF4SlE5a0JFNDlSM2xKWnY1Vkt5NTJrcDNqRS1Ta25IYkg1akhNRmsifQ.eyJpc3M................9KQtFULW544G-FBwURd6ArjgQ3Ay6NHYWZe3gWCHLmag9gF-hnzxequ7oN0LiJrA-al1qGeQv-8eiOFqX3RPCQgbybmix8qw5U6f-Rwvb47-xA
  ```

  You can run the following command to check that the new kubeconfig has access to the EKS cluster:

  ```bash
  kubectl get nodes
  ```

  The output is similar to this:

  ```
  NAME                                        STATUS   ROLES    AGE   VERSION
  ip-10-0-47-38.cn-north-1.compute.internal   Ready    <none>   11h   v1.18.8-eks-7c9bda
  ip-10-0-8-148.cn-north-1.compute.internal   Ready    <none>   78m   v1.18.8-eks-7c9bda
  ```
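You might want a kubeconfig that holds only the `kubesphere` token and therefore does not need the `aws` CLI at all. One option is to export just the current context. The following is a sketch under stated assumptions and is not part of the original steps:

- You have already run the `set-credentials` and `set-context` commands above, so the current context uses the `kubesphere` user.
- The output path `eks-member.kubeconfig` and the function names are hypothetical.

`kubectl config view --minify --flatten` keeps only the cluster, context, and user that the current context references. It writes certificate and token data in full instead of redacting them.

```bash
#!/usr/bin/env bash
# Sketch: export a standalone kubeconfig for the EKS Member Cluster that authenticates
# with the kubesphere ServiceAccount token instead of the aws exec plugin.
# Assumption: "eks-member.kubeconfig" is a hypothetical default output path.

# Succeed (exit status 0) if the given kubeconfig file still contains an exec-based user.
uses_exec_plugin() {
  grep -q '^[[:space:]]*exec:' "$1"
}

# Write only the current context, its cluster, and its user to a file, with all data inlined.
export_member_kubeconfig() {
  out="${1:-eks-member.kubeconfig}"
  kubectl config view --minify --flatten > "$out" || return 1
  if uses_exec_plugin "$out"; then
    echo "$out still depends on an exec plugin; check the user of the current context" >&2
    return 1
  fi
  # Confirm that the exported file reaches the cluster without relying on the default kubeconfig.
  kubectl --kubeconfig "$out" get nodes
}
```

Running `export_member_kubeconfig` produces a file whose content you can use when you import the cluster on the Host Cluster. Keep the file private, because it grants cluster admin access.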

Step 4: Import the EKS Member Cluster

-

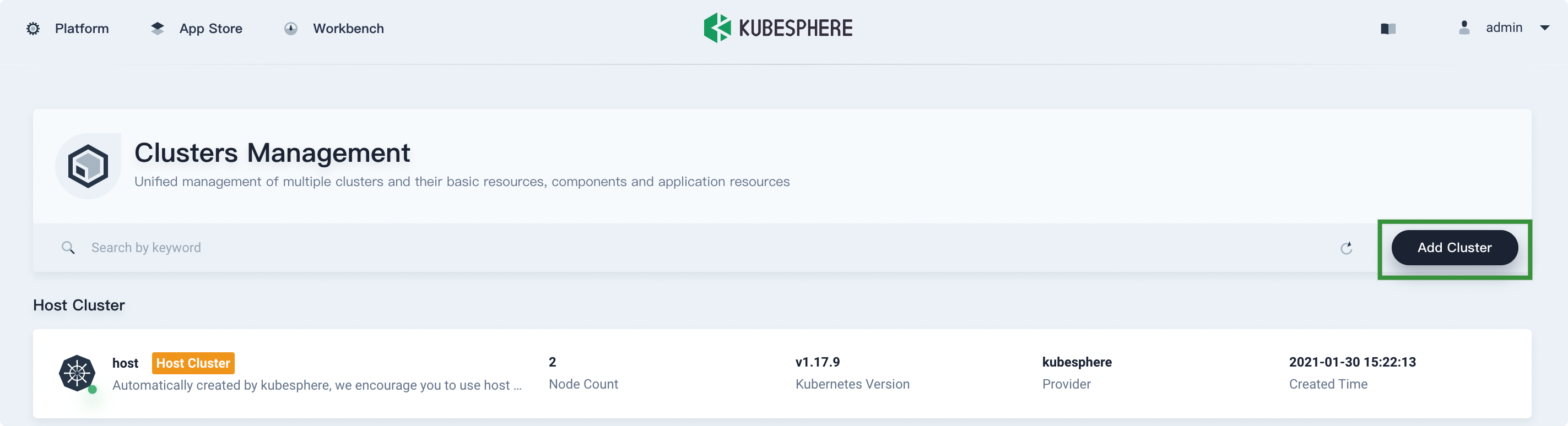

Log in to the KubeSphere console on your Host Cluster as

admin. Click Platform in the upper left corner and then select Clusters Management. On the Clusters Management page, click Add Cluster.

-

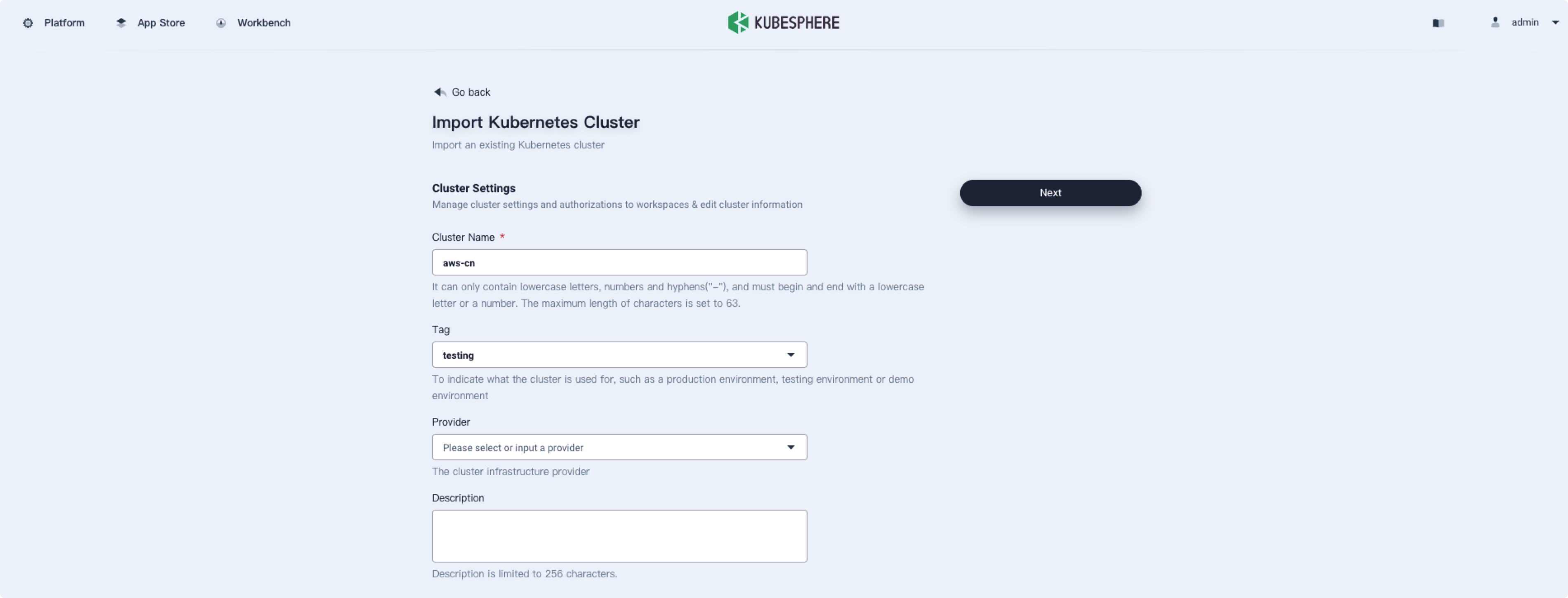

Input the basic information based on your needs and click Next.

-

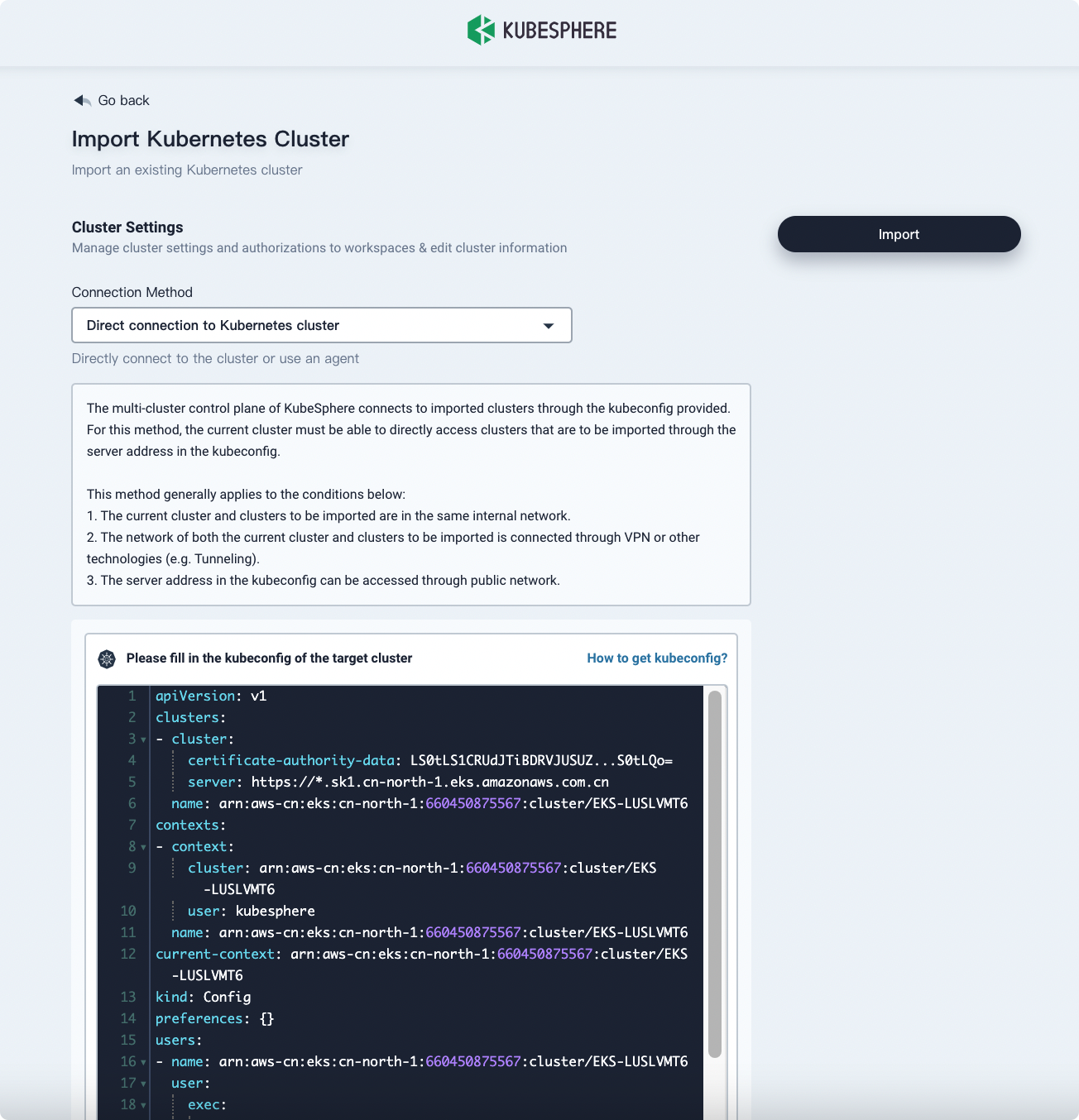

In Connection Method, select Direct connection to Kubernetes cluster. Fill in the new kubeconfig file of the EKS Member Cluster and then click Import.

-

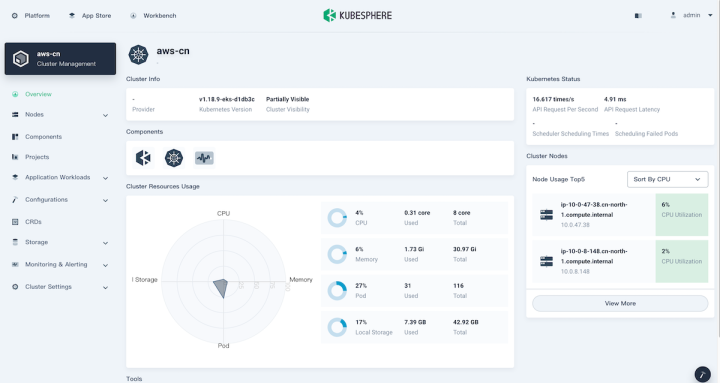

Wait for cluster initialization to finish.
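You can also follow the initialization from the command line on the Host Cluster. This is a hedged sketch that rests on the following assumptions:

- KubeSphere represents each imported cluster as a cluster-scoped `clusters.cluster.kubesphere.io` resource named after the cluster you entered in the basic information.
- This resource reports a `Ready` status condition once initialization completes.
- `eks-member` is a hypothetical cluster name.

Verify these assumptions against your KubeSphere version before relying on the sketch.

```bash
#!/usr/bin/env bash
# Sketch: block until the imported Member Cluster reports Ready on the Host Cluster.
# Assumptions: the resource type clusters.cluster.kubesphere.io is cluster-scoped and exposes
# a "Ready" condition; "eks-member" is a hypothetical default cluster name.

wait_for_member_cluster() {
  name="${1:-eks-member}"
  timeout="${2:-600s}"
  # kubectl wait polls status.conditions until the Ready condition is True or the timeout expires.
  kubectl wait --for=condition=Ready "clusters.cluster.kubesphere.io/${name}" --timeout="$timeout"
}
```

For example, `wait_for_member_cluster eks-member 10m` returns once the condition is met and fails after ten minutes otherwise. Run it only after clicking Import, because `kubectl wait` fails immediately if the resource does not exist yet.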
