You are viewing documentation for KubeSphere version: v3.0.0

KubeSphere v3.0.0 documentation is no longer actively maintained. The version you are currently viewing is a static snapshot. For up-to-date documentation, see the latest version.

Persistent Volumes and Storage Classes

This tutorial describes the basic concepts of PVs, PVCs and storage classes and demonstrates how a cluster administrator can manage storage classes and persistent volumes in KubeSphere.

Introduction

A PersistentVolume (PV) is a piece of storage in the cluster that has been provisioned by an administrator or dynamically provisioned using Storage Classes. PVs are volume plugins like Volumes, but have a lifecycle independent of any individual Pod that uses the PV. PVs can be provisioned either statically or dynamically.

A PersistentVolumeClaim (PVC) is a request for storage by a user. It is similar to a Pod: Pods consume node resources, and PVCs consume PV resources.

KubeSphere supports dynamic volume provisioning based on storage classes to create PVs.
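
As a rough illustration of the relationship described above, the manifest below sketches a PVC that requests storage from a storage class, which then triggers dynamic provisioning of a matching PV. The names demo-pvc, demo-project and demo-storage-class and the 10Gi size are hypothetical placeholders, not values from this tutorial.

```yaml
# A minimal sketch of a PVC that relies on dynamic provisioning.
# All names and the requested size are hypothetical placeholders.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc                 # hypothetical PVC name
  namespace: demo-project        # hypothetical project (namespace)
spec:
  storageClassName: demo-storage-class   # hypothetical StorageClass to provision from
  accessModes:
    - ReadWriteOnce              # must be an access mode the storage backend supports
  resources:
    requests:
      storage: 10Gi              # requested capacity of the PV
```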

A StorageClass provides a way for administrators to describe the classes of storage they offer. Different classes might map to quality-of-service levels, or to backup policies, or to arbitrary policies determined by the cluster administrators. Each StorageClass has a provisioner that determines what volume plugin is used for provisioning PVs. This field must be specified. To find out which value to use, see the official Kubernetes documentation or check with your storage administrator.

The table below summarizes common volume plugins for various provisioners (storage systems).

| Type | Description |
|---|---|
| In-tree | Built-in and run as part of Kubernetes, such as RBD and Glusterfs. For more plugins of this kind, see Provisioner. |
| External-provisioner | Deployed independently from Kubernetes, but works like an in-tree plugin, such as nfs-client. For more plugins of this kind, see External Storage. |
| CSI | Container Storage Interface, a standard for exposing storage resources to workloads on COs (for example, Kubernetes), such as QingCloud-csi and Ceph-CSI. For more plugins of this kind, see Drivers. |

Prerequisites

You need an account granted a role including the authorization of Clusters Management. For example, you can log in to the console as admin directly or create a new role with the authorization and assign it to an account.

Manage Storage Classes

-

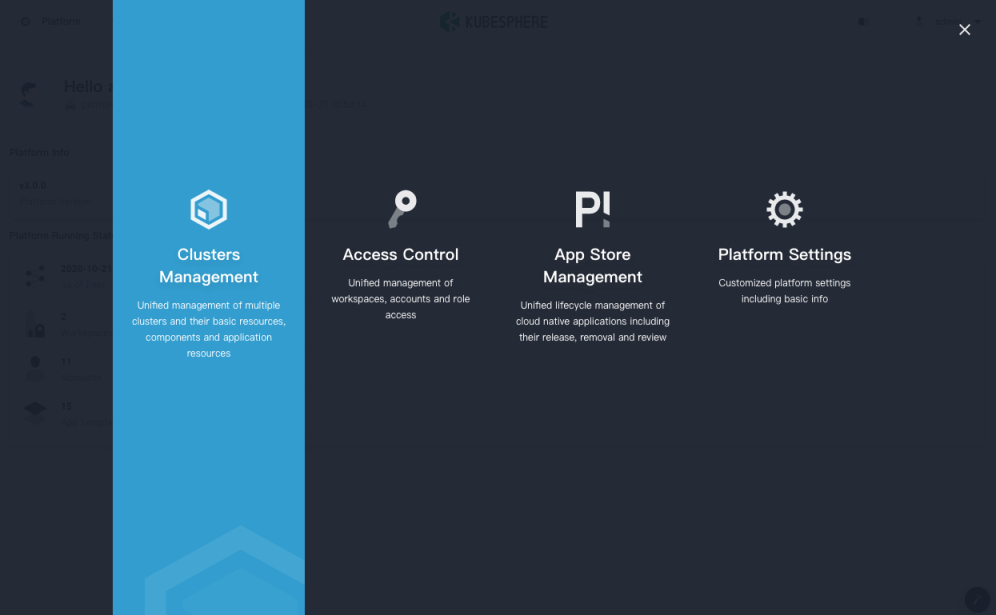

Click Platform in the top left corner and select Clusters Management.

-

If you have enabled the multi-cluster feature with member clusters imported, you can select a specific cluster. If you have not enabled the feature, refer to the next step directly.

-

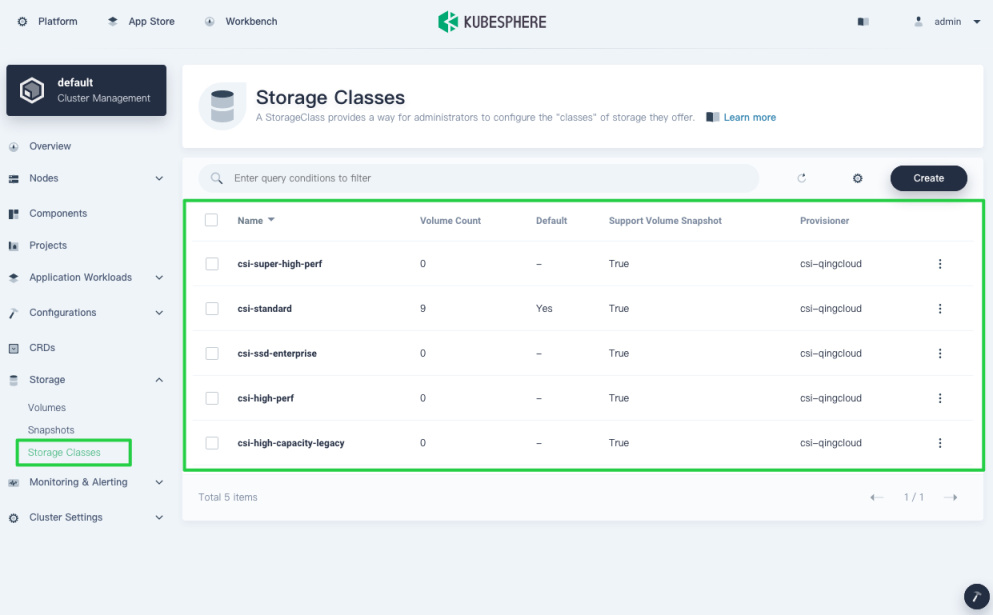

On the Cluster Management page, navigate to Storage Classes under Storage, where you can create, update and delete a storage class.

-

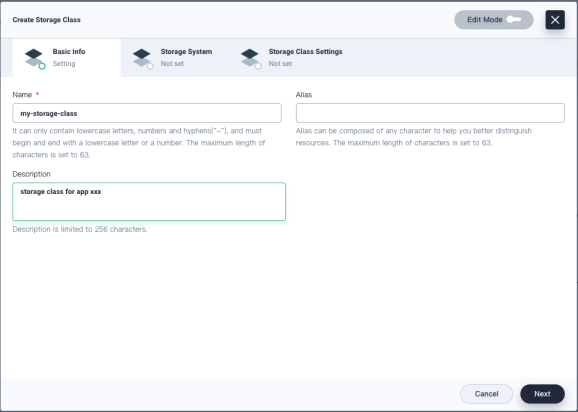

To create a storage class, click Create and enter the basic information in the pop-up window. When you finish, click Next.

-

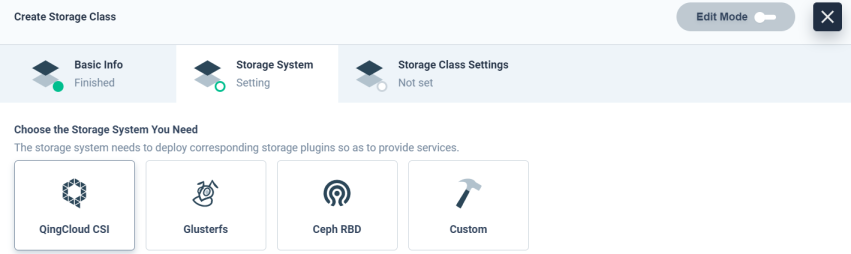

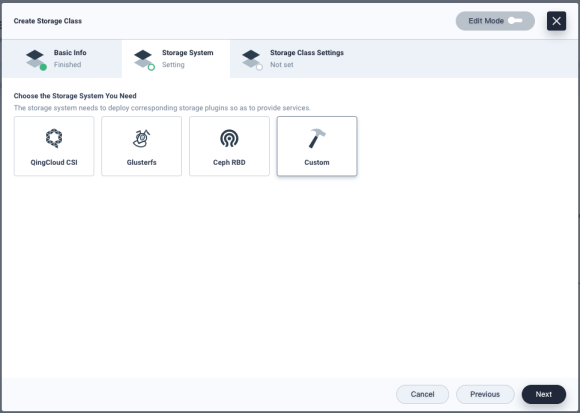

In KubeSphere, you can create storage classes for

QingCloud-CSI,GlusterfsandCeph RBDdirectly. Alternatively, you can also create customized storage classes for other storage systems based on your needs. Select a type and click Next.

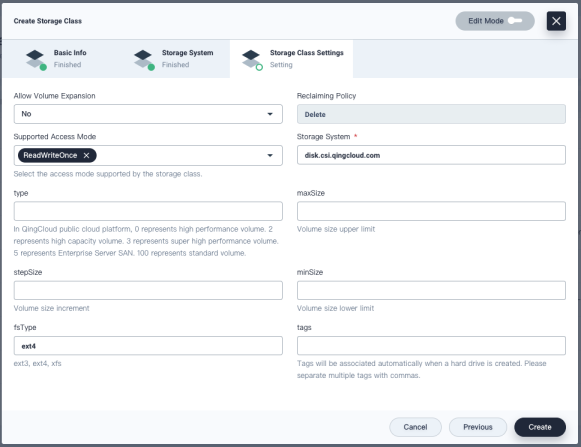

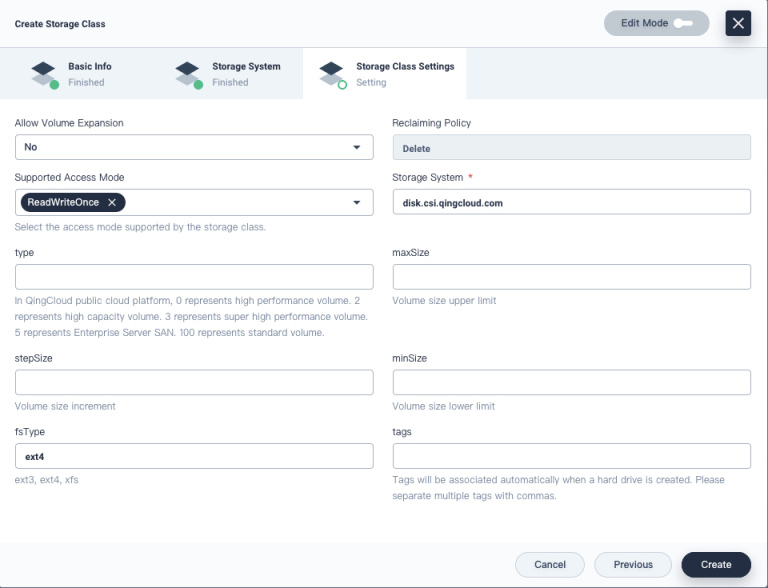

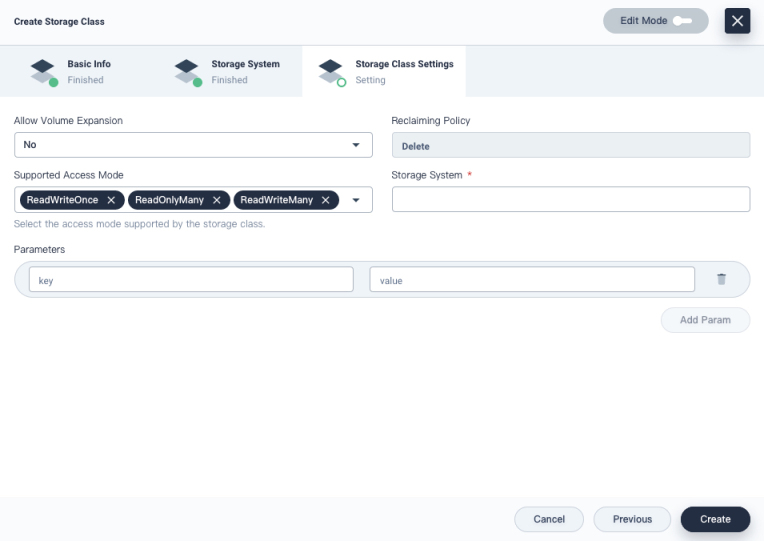

Common settings

Some settings are commonly used and shared among storage classes. You can find them as dashboard properties on the console, which are also indicated by fields or annotations in the StorageClass manifest. You can see the manifest file in YAML format by enabling Edit Mode in the top right corner. Here are property descriptions of some commonly used fields in KubeSphere.

| Property | Description |
|---|---|
| Allow Volume Expansion | Specified by allowVolumeExpansion in the manifest. When it is set to true, PVs can be configured to be expandable. For more information, see Allow Volume Expansion. |
| Reclaiming Policy | Specified by reclaimPolicy in the manifest. It can be set to Delete (default) or Retain. For more information, see Reclaim Policy. |
| Storage System | Specified by provisioner in the manifest. It determines what volume plugin is used for provisioning PVs. For more information, see Provisioner. |
| Supported Access Mode | Specified by metadata.annotations[storageclass.kubesphere.io/supported-access-modes] in the manifest. It tells KubeSphere which access modes are supported. |

Other settings differ from one storage plugin to another. In the manifest, they are always specified under the parameters field. They are described in detail in the sections below. You can also refer to Parameters in the official Kubernetes documentation.
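
To make the mapping between console properties and manifest fields more concrete, here is a minimal sketch of a StorageClass manifest as it might appear in Edit Mode. The name, the provisioner value and the JSON-array format of the annotation value are assumptions for illustration only; check the manifest generated by your own console for the exact form.

```yaml
# A sketch of how the common settings may appear in a StorageClass manifest.
# The name, provisioner and annotation value format are assumptions.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: demo-storage-class       # hypothetical name
  annotations:
    # Supported Access Mode (value format assumed to be a JSON array string)
    storageclass.kubesphere.io/supported-access-modes: '["ReadWriteOnce"]'
provisioner: example.com/demo-provisioner   # Storage System (hypothetical value)
allowVolumeExpansion: true       # Allow Volume Expansion
reclaimPolicy: Delete            # Reclaiming Policy: Delete or Retain
parameters: {}                   # plugin-specific settings go here
```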

QingCloud CSI

QingCloud CSI is a CSI plugin on Kubernetes for the storage service of QingCloud. Storage classes of QingCloud CSI can be created on the KubeSphere console.

Prerequisites

- QingCloud CSI can be used on both public cloud and private cloud of QingCloud. Therefore, make sure KubeSphere has been installed on either of them so that you can use cloud storage services.

- QingCloud CSI Plugin has been installed on your KubeSphere cluster. See QingCloud-CSI Installation for more information.

Settings

| Property | Description |
|---|---|
| type | On the QingCloud platform, 0 represents high performance volumes. 2 represents high capacity volumes. 3 represents super high performance volumes. 5 represents Enterprise Server SAN. 6 represents NeonSan HDD. 100 represents standard volumes. 200 represents enterprise SSD. |
| maxSize | The volume size upper limit. |
| stepSize | The volume size increment. |
| minSize | The volume size lower limit. |
| fsType | Filesystem type of the volume: ext3, ext4 (default), xfs. |
| tags | The IDs of QingCloud Tag resources, separated by commas. |

For more storage class parameters, see the QingCloud-CSI user guide.
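
The settings above could be combined into a manifest along the following lines. This is only a sketch: the provisioner name disk.csi.qingcloud.com, the size values, and the assumption that sizes are expressed in GiB are not taken from this tutorial, so verify them against the QingCloud-CSI user guide for your plugin version.

```yaml
# A sketch of a QingCloud CSI StorageClass; verify every value against the
# QingCloud-CSI user guide before use.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-qingcloud            # hypothetical name
provisioner: disk.csi.qingcloud.com   # assumed provisioner name of the CSI plugin
allowVolumeExpansion: true
reclaimPolicy: Delete
parameters:
  type: "0"                      # 0 = high performance volumes (see table above)
  minSize: "10"                  # volume size lower limit (illustrative, assumed GiB)
  maxSize: "500"                 # volume size upper limit (illustrative, assumed GiB)
  stepSize: "10"                 # volume size increment (illustrative, assumed GiB)
  fsType: ext4                   # ext3, ext4 (default) or xfs
  # tags: "tag-id-1,tag-id-2"    # hypothetical QingCloud Tag IDs, comma-separated
```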

Glusterfs

Glusterfs is an in-tree storage plugin on Kubernetes, which means you don’t need to install a separate volume plugin.

Prerequisites

The Glusterfs storage system has already been installed. See GlusterFS Installation Documentation for more information.

Settings

| Property | Description |
|---|---|
| resturl | The URL of the Gluster REST service/Heketi service that provisions Gluster volumes on demand. |
| clusterid | The ID of the cluster which will be used by Heketi when provisioning the volume. |
| restauthenabled | A boolean that enables authentication to the Gluster REST server. |
| restuser | The Glusterfs REST service/Heketi user who has access to create volumes in the Glusterfs Trusted Pool. |
| secretNamespace, secretName | The namespace and name of the Secret that contains the user password to use when talking to the Gluster REST service. |
| gidMin, gidMax | The minimum and maximum value of GID range for the StorageClass. |
| volumetype | The volume type and its parameters can be configured with this optional value. |

For more information about StorageClass parameters, see Glusterfs in Kubernetes Documentation.
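
For orientation, a Glusterfs StorageClass using the parameters above might look like the sketch below, modeled on the example in the Kubernetes documentation. The URL, cluster ID, user, Secret names and GID range are placeholders that you would replace with values from your own Heketi deployment.

```yaml
# A sketch of an in-tree Glusterfs StorageClass. All parameter values are
# placeholders for a hypothetical Heketi deployment.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: glusterfs                # hypothetical name
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://127.0.0.1:8081"                # Heketi REST endpoint (placeholder)
  clusterid: "630372ccdc720a92c681fb928f27b53f"   # Heketi cluster ID (placeholder)
  restauthenabled: "true"
  restuser: "admin"
  secretNamespace: "default"     # namespace of the Secret holding the password
  secretName: "heketi-secret"    # hypothetical Secret name
  gidMin: "40000"
  gidMax: "50000"
  volumetype: "replicate:3"      # replicated volume with three replicas
```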

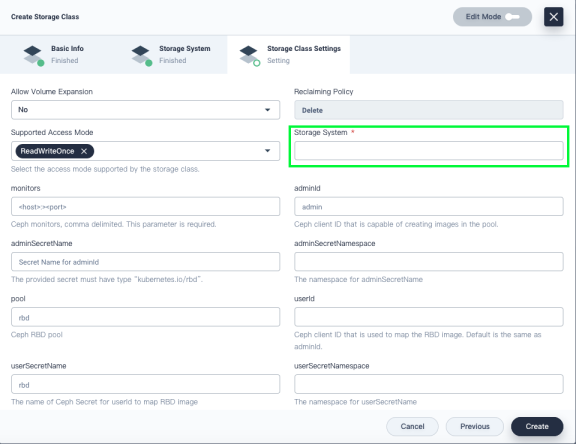

Ceph RBD

Ceph RBD is also an in-tree storage plugin on Kubernetes. The volume plugin is already in Kubernetes, but the storage server must be installed before you create the storage class of Ceph RBD.

Because hyperkube images have been deprecated since Kubernetes 1.17, in-tree Ceph RBD may not work without hyperkube.

Nevertheless, you can use rbd-provisioner as a substitute, whose format is the same as that of in-tree Ceph RBD. The only parameter that differs is provisioner (i.e., Storage System on the KubeSphere console). If you want to use rbd-provisioner, the value of provisioner must be ceph.com/rbd (enter this value in Storage System in the image below). If you use in-tree Ceph RBD, the value must be kubernetes.io/rbd.

Prerequisites

- The Ceph server has already been installed. See Ceph Installation Documentation for more information.

- Install the plugin if you choose to use rbd-provisioner. Community developers provide charts for rbd provisioner that you can use to install rbd-provisioner with Helm.

Settings

| Property | Description |
|---|---|
| monitors | The Ceph monitors, comma delimited. |
| adminId | The Ceph client ID that is capable of creating images in the pool. |
| adminSecretName | The Secret Name for adminId. |
| adminSecretNamespace | The namespace for adminSecretName. |
| pool | The Ceph RBD pool. |
| userId | The Ceph client ID that is used to map the RBD image. |
| userSecretName | The name of Ceph Secret for userId to map RBD image. |
| userSecretNamespace | The namespace for userSecretName. |
| fsType | The fsType that is supported by Kubernetes. |
| imageFormat | The Ceph RBD image format, 1 or 2. |
| imageFeatures | This parameter is optional and should only be used if you set imageFormat to 2. |

For more information about StorageClass parameters, see Ceph RBD in Kubernetes Documentation.
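
Putting the settings together, a Ceph RBD StorageClass might resemble the following sketch, which follows the example in the Kubernetes documentation. The monitor address, client IDs, pool and Secret names are placeholders for a hypothetical Ceph cluster.

```yaml
# A sketch of a Ceph RBD StorageClass. Monitor address, IDs, pool and Secret
# names are placeholders for a hypothetical Ceph cluster.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-rbd                 # hypothetical name
# Use kubernetes.io/rbd for in-tree Ceph RBD, or ceph.com/rbd for rbd-provisioner.
provisioner: kubernetes.io/rbd
parameters:
  monitors: 10.16.153.105:6789   # comma-delimited list of Ceph monitors (placeholder)
  adminId: kube
  adminSecretName: ceph-secret
  adminSecretNamespace: kube-system
  pool: kube
  userId: kube
  userSecretName: ceph-secret-user
  userSecretNamespace: default
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"      # only meaningful when imageFormat is "2"
```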

Custom storage classes

You can create custom storage classes for your storage systems if they are not directly supported by KubeSphere. The following example shows you how to create a storage class for NFS on the KubeSphere console.

NFS Introduction

NFS (Network File System) is widely used on Kubernetes with the external-provisioner volume plugin nfs-client. You can create the storage class of nfs-client by clicking Custom in the image below.

Prerequisites

- An available NFS server.

- The volume plugin nfs-client has already been installed. Community developers provide charts for nfs-client that you can use to install nfs-client with Helm.

Common Settings

| Property | Description |
|---|---|
| Storage System | Specified by provisioner in the manifest. If you install the storage class by using the charts for nfs-client, it can be cluster.local/nfs-client-nfs-client-provisioner. |
| Allow Volume Expansion | Specified by allowVolumeExpansion in the manifest. Select No. |
| Reclaiming Policy | Specified by reclaimPolicy in the manifest. The value is Delete by default. |
| Supported Access Mode | Specified by metadata.annotations[storageclass.kubesphere.io/supported-access-modes] in the manifest. ReadWriteOnce, ReadOnlyMany and ReadWriteMany are all selected by default. |

Parameters

| Key | Description | Value |
|---|---|---|
| archiveOnDelete | Whether to archive the PVC when it is deleted | true |
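
The common settings and parameters listed above correspond roughly to the manifest sketched below. The storage class name and the JSON-array format of the annotation value are assumptions; the provisioner value is the one mentioned in the table and depends on how nfs-client was installed.

```yaml
# A sketch of a custom StorageClass for nfs-client. The name and the annotation
# value format are assumptions; adjust the provisioner to your installation.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client               # hypothetical name
  annotations:
    # Supported Access Mode (value format assumed to be a JSON array string)
    storageclass.kubesphere.io/supported-access-modes: '["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]'
provisioner: cluster.local/nfs-client-nfs-client-provisioner   # Storage System
allowVolumeExpansion: false      # Allow Volume Expansion: No
reclaimPolicy: Delete            # Reclaiming Policy
parameters:
  archiveOnDelete: "true"        # archive the PVC when it is deleted
```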

Manage Volumes

Once the storage class is created, you can create volumes with it. You can list, create, update and delete volumes in Volumes under Storage on the KubeSphere console. For more details, please see Volume Management.
